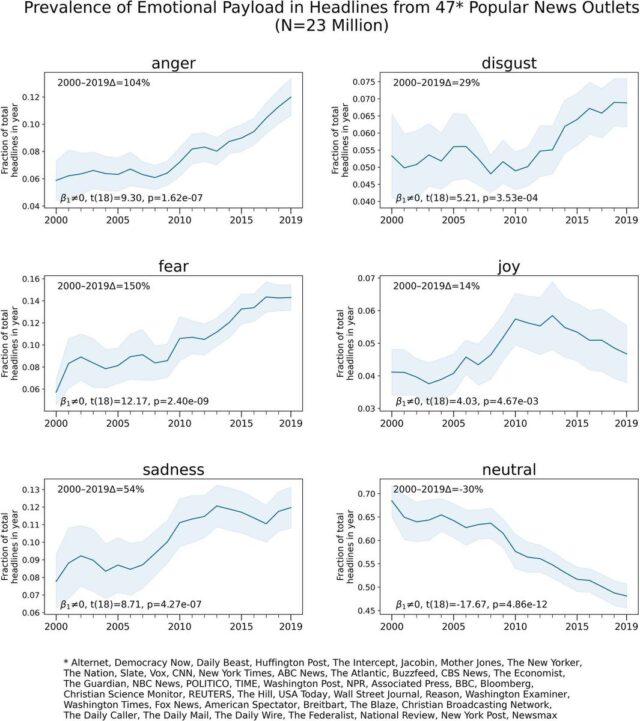

Recently, this graph made the rounds on Twitter. It shows how news headlines have become more emotionally intense over the years: They appear to be written specifically to trigger anger, fear, and similar emotions.

Now, I can’t speak to the validity of the data here, but a few things strike me as noteworthy.

First, this has been an ongoing trend for decades. There was already a body of evidence of this happening in both print and TV back when I got my master’s in media studies in the early 2000s, and it wasn’t particularly new even then. If memory serves, it was attributed, among other things, to the advent of cable TV. This makes intuitive sense, at least as far as TV is concerned: Public and commercial TV run on fundamentally different business models and incentives. If you sell ads, you increasingly live off “engagement,” i.e., how much time and attention viewers and readers spend on your product.

Second, I wondered whether anything changed during the timeframe analyzed here. And quite a few things did, right? The political climate in the US (the focus of this study) became a lot more radical, with Fox News turning things up to 11, and with Trump’s election and everything that led up to it. New, and more radical, media outlets popped up.

Third, social media really took off and fundamentally changed the way we consume and share news. The rise of social media, the emergence of new media outlets, and the radicalization of discourse seem strongly interrelated.

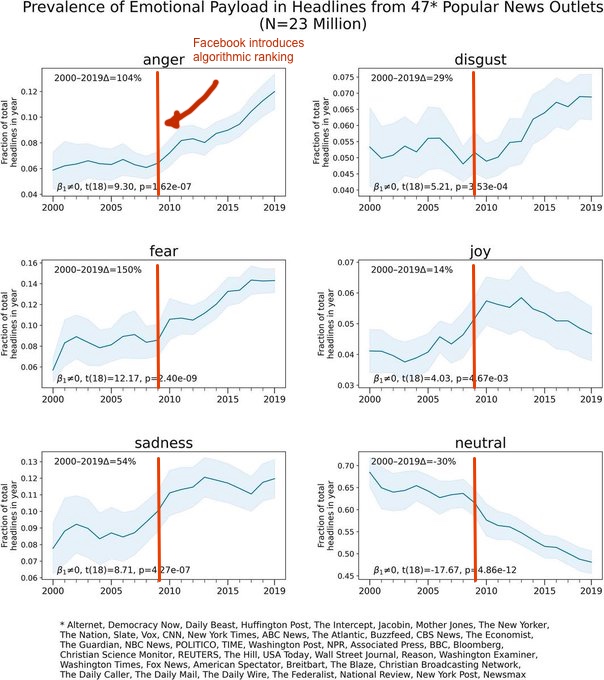

Fourth, one specific aspect here strikes me as fundamental: The introduction of algorithmic recommendations on social media combined with behavioral tracking and content targeting across the web. I’m calling out Facebook because it was already a, if not the, dominant social media platform in 2009 when they ditched their chronological content delivery over to algorithmic recommender systems.

Here’s the graph above with a red line marking 2009, the year Facebook went all in on algorithmic recommendations:

How we consume and share news has a profound influence on our public sphere and on the way we shape society. The web is also made by people, with intent; if it doesn’t work the way we want it to, we can change it. Just like that.

I think we should take a very close look at which aspects of the way the web works right now are actually working and which are broken, and breaking more things every day. There’s a lot that algorithmic recommenders can do for us, but as long as they’re coupled to boosting engagement no matter what, we’re in for a bumpy ride.

Header image: Yasmin Dwiputri & Data Hazards Project / Better Images of AI / Managing Data Hazards / CC-BY 4.0