Openness has been a founding pillar of the web: open source, open data, and free culture have all been driving forces for a thriving internet. Increasingly, we’re seeing the limits and downsides that openness can have.

These last few days, a leaked Facebook/Meta memo has been making the rounds. In it, Facebook engineers outline how unprepared Facebook is to control data flows as is increasingly required by regulations around the world. (Vice article, FB’s internal document).

What’s the big picture / tension here?

- Data protection regulations aim to give users more control over how companies use their data. Giving them more granular control of what types of information can or cannot be used for certain purposes means that all the information about each user needs to be labeled (or otherwise managed) so that it can be routed through various technological systems, filtered, added, combined, etc. An extremely simple example: “This user’s avatar picture may be shown publicly on their user profile but may not be indexed and displayed in user search, and must never be shown to these blocked accounts.”

- Facebook has an internal culture of extreme openness (permissiveness might be the better term), where lots of data is added to a giant pool and engineers and product teams can pull from it freely to build products and services. This makes it hard if not impossible to offer those types of granular controls. (Maybe even any kind of controls.)
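To make the avatar example a bit more concrete, here is a minimal sketch of what "labeled" data could look like in code. This is purely illustrative and not how Facebook or anyone else actually does it: the names `Purpose`, `LabeledField`, and `read` are all made up for this post, and a real system would need far more (consent records, audit trails, propagation of labels through derived data).

```python
from dataclasses import dataclass
from enum import Enum, auto


class Purpose(Enum):
    """Hypothetical purposes a piece of user data might be used for."""
    PUBLIC_PROFILE = auto()
    USER_SEARCH = auto()


@dataclass(frozen=True)
class LabeledField:
    """A piece of user data that carries its own usage policy (assumed design)."""
    value: str
    allowed_purposes: frozenset
    blocked_viewers: frozenset = frozenset()

    def read(self, purpose, viewer_id):
        """Return the value only if both the purpose and the viewer are permitted."""
        if purpose not in self.allowed_purposes:
            return None
        if viewer_id in self.blocked_viewers:
            return None
        return self.value


# The avatar may be shown on the public profile, may not be used for
# user search, and must never be shown to a blocked account.
avatar = LabeledField(
    value="avatar.png",
    allowed_purposes=frozenset({Purpose.PUBLIC_PROFILE}),
    blocked_viewers=frozenset({"blocked_account_1"}),
)
```

In this sketch, a profile page asking for `Purpose.PUBLIC_PROFILE` gets the picture unless the viewer is blocked, while a search indexer asking for `Purpose.USER_SEARCH` gets nothing back. The catch is that every consumer has to go through `read`, which is exactly what an open, pull-whatever-you-need data pool does not enforce.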

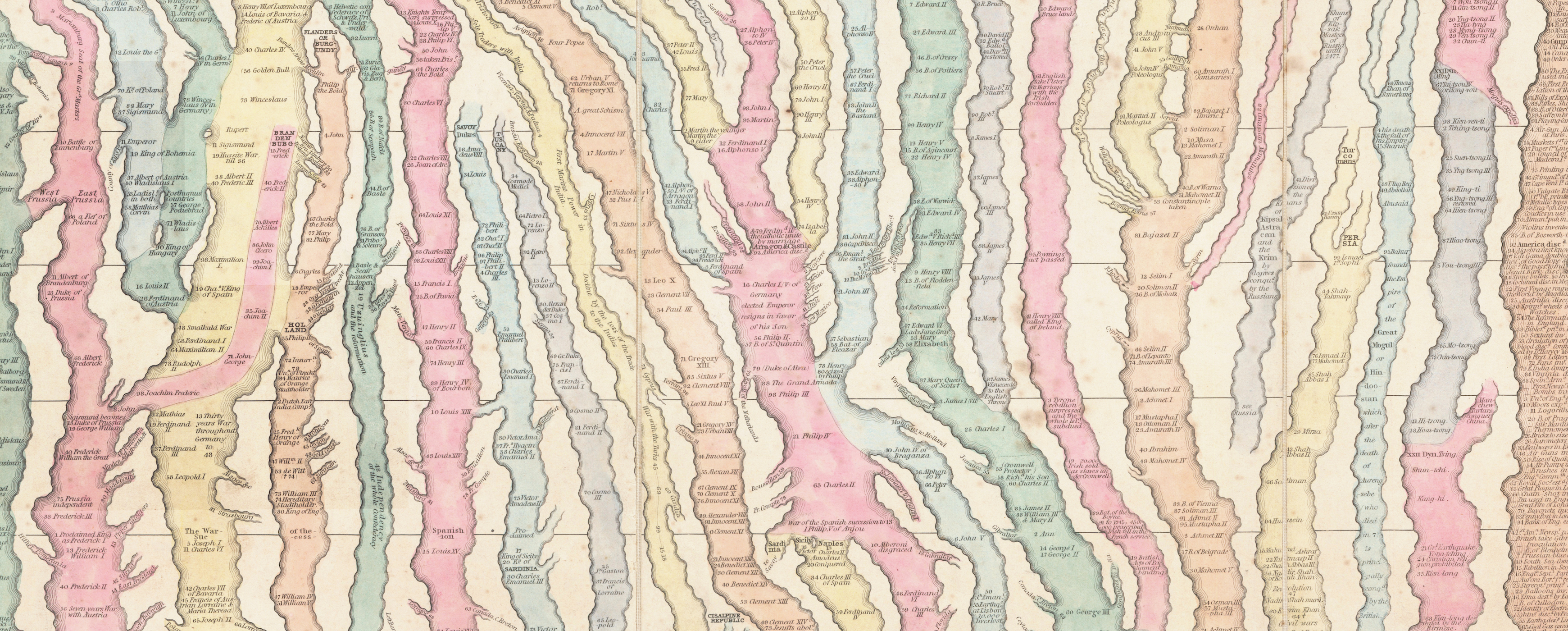

In their internal document, Facebook engineers make it clear that they see it as nearly impossible to prevent data from seeping from one of their internal systems to another, even where that would be illegal. They compare all their data to one open data lake into which everything flows, intermingles, and out of which everything flows again with limited means of control. (It’s worth noting that there’s a complicating factor: These new data handling regimes also must apply to the data already in the system.)

If true, and it sounds quite plausible, it would mean that FB is set up in a way that makes it impossible to comply with data protection laws, at least in the EU.

Here’s a direct quote from the memo on FB’s general unpreparedness (highlights mine):

“We’ve built systems with open borders. The result of these open systems and open culture is well described with an analogy: Imagine you hold a bottle of ink in your hand. This bottle of ink is a mixture of all kinds of user data (3PD, 1PD, SCD, Europe, etc.) You pour that ink into a lake of water (our open data systems; our open culture) … and it flows … everywhere,” the document read. “How do you put that ink back in the bottle? How do you organize it again, such that it only flows to the allowed places in the lake?”

Now that’s pretty alarming, if very much expected and in line with FB’s culture and past actions. The overall picture here is one where it would take years to get FB/Meta up to snuff on the “easy” stuff like their products, services and – maybe most notably – ads.

Really interesting is what’ll happen around machine learning and artificial intelligence. Here, the memo states:

“This is a new area for regulation and we are very likely to see novel requirements for several years to come. We have very low confidence that our solutions are sufficient.”

So yeah, this isn’t going to end well. That’s why it’s so important that we’re seeing this long-overdue regulatory pushback against the type of overreach we see manifested in tracking ads and dominant, monopolistic tech platforms.

Anyway.