Things don’t look too rosy for AI right now

A few things about the state of AI in 2024 that, taken together, paint a less-than-rosy picture: (1) Ed Zitron makes a case, and a pretty compelling one, that…

How far into the future do you plan? There doesn’t seem to be a primary source for this other than a 1994 transcript of a lecture by Dutch…

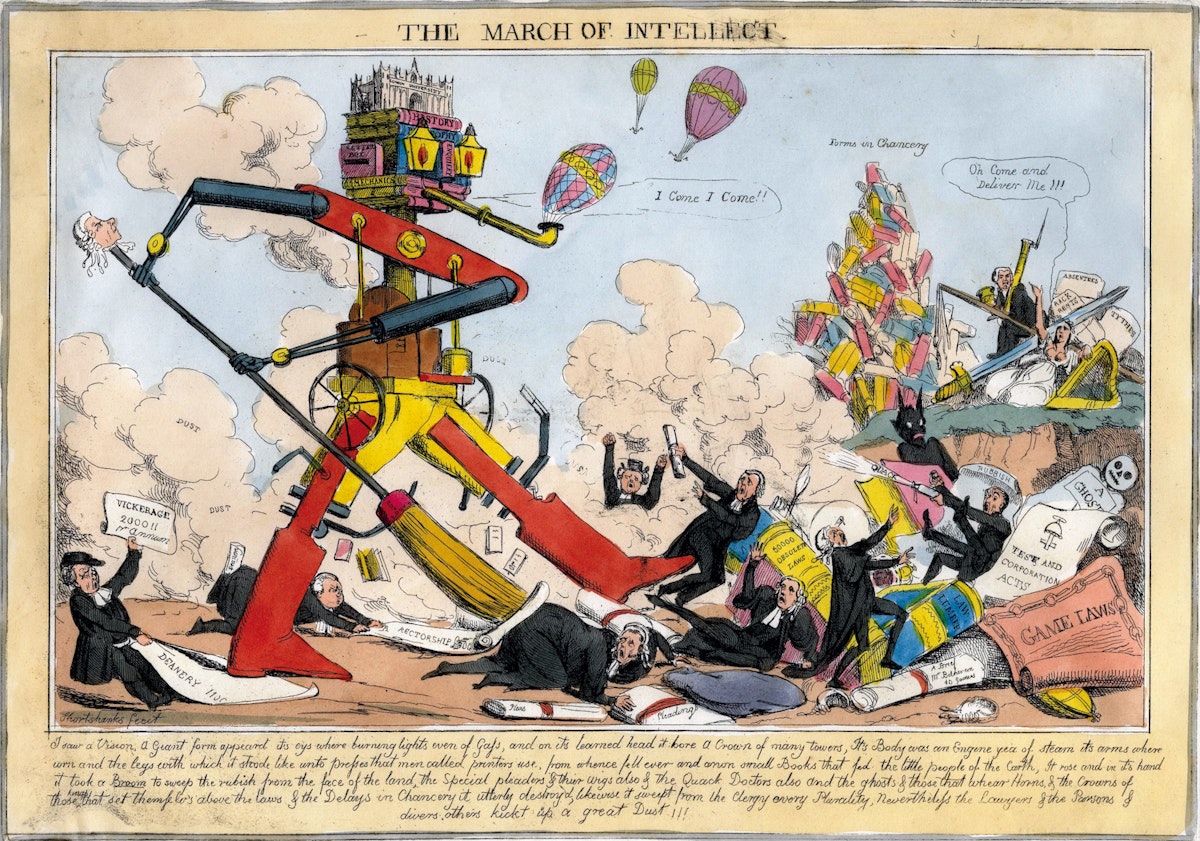

Tech is a sector that’s full of hype, and of utopian promises. Social media will revive democracy! AI will cure cancer and solve the climate crisis! Some of…

…and by insane, I mean insanely small compared to its impact. LobbyControl and Corporate Europe Observatory just published a great new report on Big Tech lobby spending. And…

Hey hey, while summer is having a roaring comeback here in Berlin, I’m mostly heads-down. I’ve got a busy rest of the year ahead (which is good, of…

Hi there, I hope this catches you on vacation somewhere, or enjoying a slight summer lull in your busy schedule. In Berlin, where I’m based, the city seems…

In recent conversations, we repeatedly touched on the point that in some of the biggest societal challenges — specifically ongoing threats to democracy — tech appears to be…