Some thoughts after Mercator Forum

I’m on my way back home from Essen, where I attended Mercator Forum, the foundation’s annual convening of its stakeholders, communities and grantee partners. This year it was…

Note: This is cross-posted from my newsletter, to which you can subscribe here. Here are some thoughts that have been kicking around my head for some time.…

Over on Threads I had an exchange about targeted ads: are they better than generic ads? Is ad tracking really bad? I see some real, relevant and big…

There are two major threat models to consider when discussing algorithmic decision-making systems (ADM) — which I’ll get to in a moment. The other day, when I spoke to…

It’s time to pull back the curtain on a project I’m extremely happy I’ve had the opportunity to work on for the last year or so: A new…

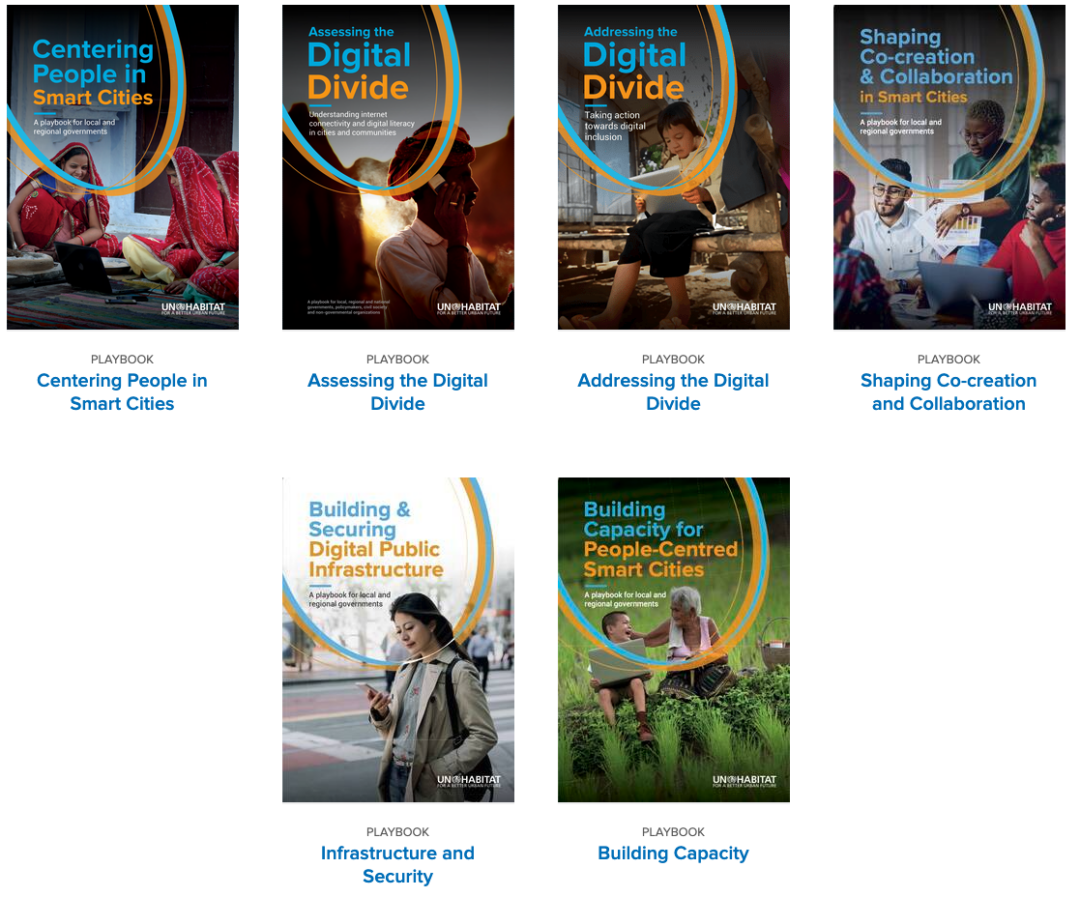

UN Habitat launched a new series of playbooks as part of its People Centered Smart Cities flagship program. I was happy to be a peer reviewer last year…

For the upcoming RightsCon, I’m co-hosting a joint session with Stiftung Mercator (who I work with) and European AI Fund (who I served as interim director for recently,…