Things that caught my attention

A few things that caught my attention over the last week or so. Some of them appear pretty wild even by 21st-century standards, but I guess that’s…

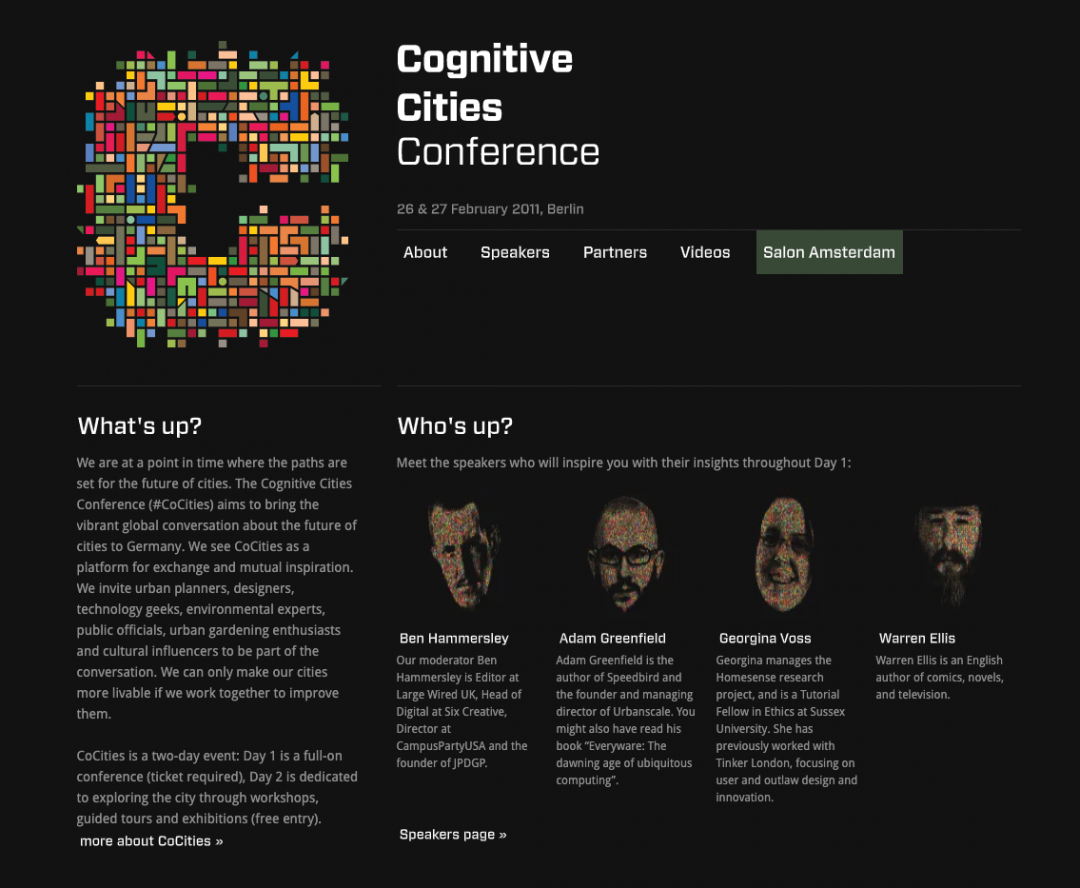

Exactly 10 years ago, a group of us gathered in Berlin for the Cognitive Cities Conference, a 2011 event about the implications of smart cities that I was involved…

For years now, we’ve discussed variations of the question “who owns my data?” And alas, we didn’t get very far, for several reasons: The most economic value of…

Over at ThingsCon, I’m stepping down from my seat on the board of directors. Below, I’m including the official blog post announcement from ThingsCon. But first I’d like…

If you read this, chances are you spend a significant amount of your day in meetings — these days, likely video meetings. During the first lockdown, it seemed like…

After reading Monique van Dusseldorp’s most excellent new newsletter on the future of (hybrid) events, I re-watched this Travis Scott x Fortnite video from April 2020. At the…

We’ve been seeing regulators around the world take a strong stance against Big Tech. From China blocking one of the world’s largest IPOs to the antitrust moves against…