Refractive Fragmentation — where did social media go?

“An X never, ever marks the spot,” Indiana Jones once famously stated. Only in this one particular case, it totally does: When Twitter became X, it very much…

We know the many ways social media platforms are failing us. But/and I think it would be tremendously helpful to expand our vocabulary and thinking about these things…

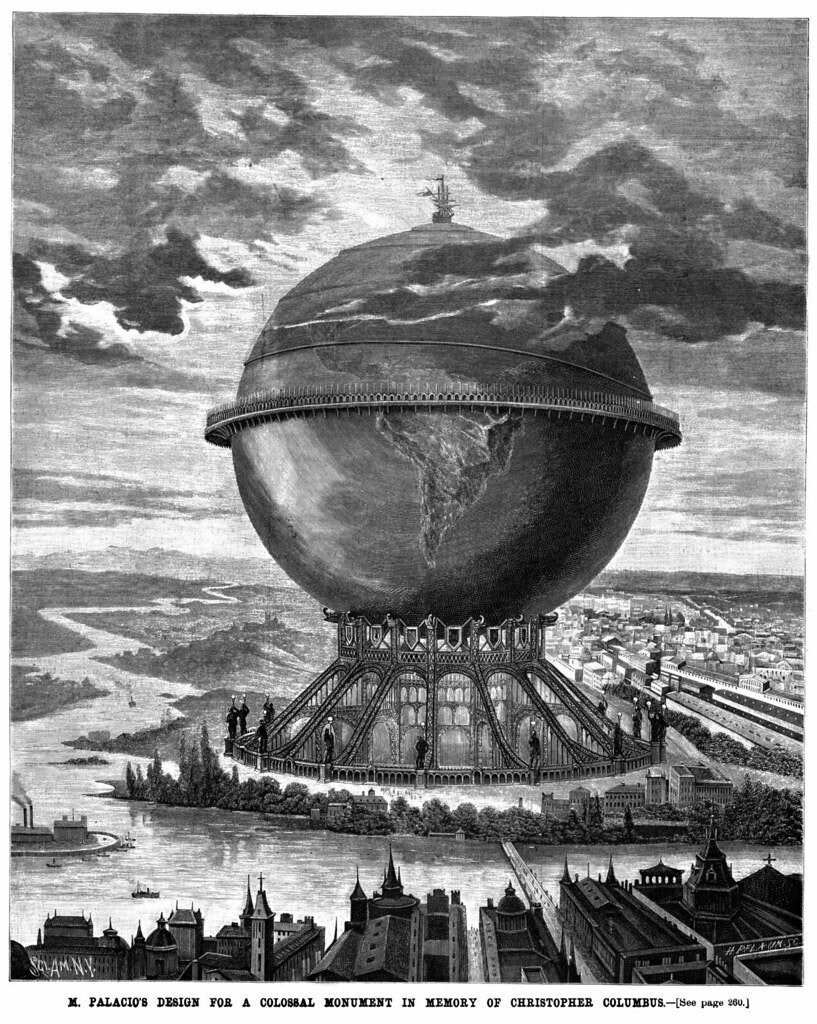

Tech is a sector that’s full of hype, and of utopian promises. Social media will revive democracy! AI will cure cancer and solve the climate crisis! Some of…

A quick transparency update: I’m happy to share that I’ve recently started working with Civitates, a philanthropic initiative for democracy and solidarity in Europe. In Civitates’ own words:…

In recent conversations, we repeatedly touched on the point that in some of the biggest societal challenges — specifically ongoing threats to democracy — tech appears to be…

After a brief engagement last year for a strategy process, I’m excited to be working more intensely with Sovereign Tech Fund, a publicly funded program to strengthen public…

A classic lens to probe the potential impact of a new technology is to explore that technology from adversarial points of view: What might a criminal do with…