On the Buxton Index

How far into the future do you plan? There doesn’t seem to be a primary source for this other than a 1994 transcript of a lecture by Dutch…

In gaming, the term sherpa refers to an experienced gamer who helps newer players get through hard levels. This is not purely a guiding…

Two things side by side about scale that caught my eye: One: Over at SNV, Julian Jaursch published a paper (English, German) on EU platform regulation, or more…

Just a quick shout-out to an older — dare I say classic? — publication by now-defunct British NGO Doteveryone. Like a great many things Doteveryone published throughout their few…

A lot of my work is helping orgs (and more importantly, people) navigate through transitions of some kind or another: The complexity and uncertainty of a world changed…

The New York City CTO team recently reached out to me for some feedback on the new NYC IoT Strategy, which of course I gladly contributed. And I’m…

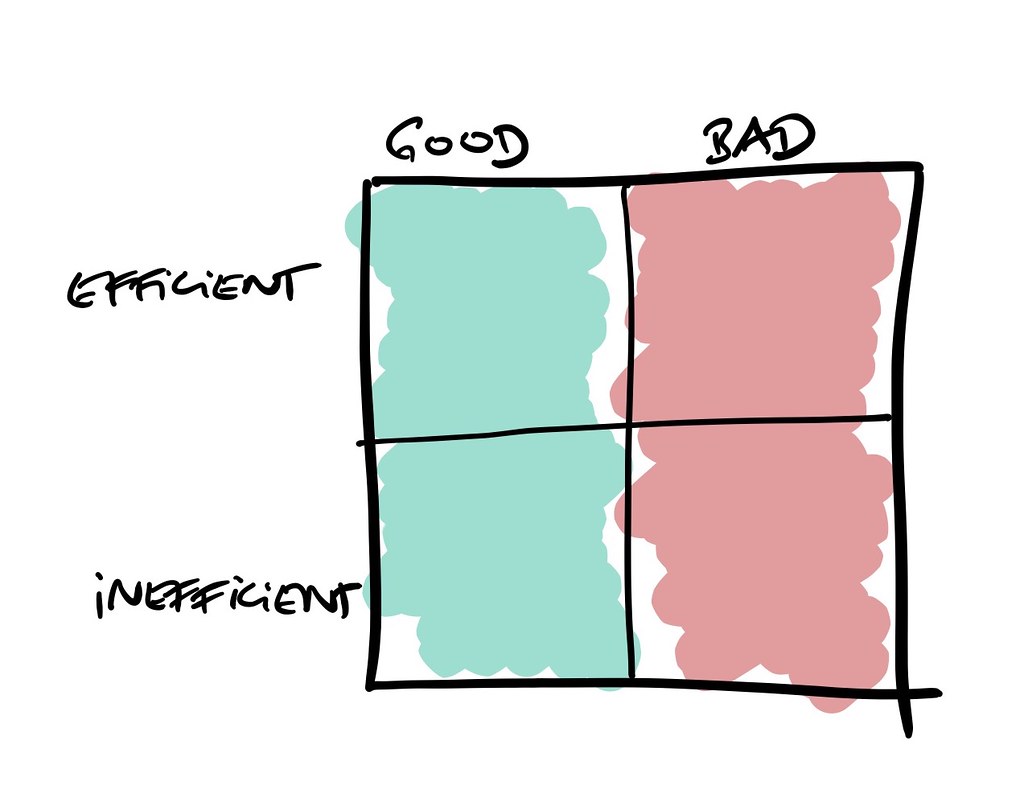

I’m reading Kim Stanley Robinson’s Ministry for the Future, which is all about climate change. In it, the author riffs a bit on the role of efficiencies and…