For a while now I’ve had the chance to experiment a little with DALL-E 2, Open AI’s new AI system that “can create realistic images and art from a description in natural language.”

Not to spoil anything, but it’s quite wild. AI, and AI image generation from language, are pretty involved topics which I can only scratch the surface of. That said, it’s interesting to kick the tires on those systems to get a better feel for how they work, how they fail — and maybe most importantly, how they unintentionally produce surprising and interesting things.

The basics first: The way the system works under the hood, in the words of OpenAI:

“DALL-E 2 has learned the relationship between images and the text used to describe them. It uses a process called “diffusion,” which starts with a pattern of random dots and gradually alters that pattern towards an image when it recognizes specific aspects of that image.”

In effect, the system tries to find commonalities between terms used online to describe images and the images themselves, and then produces new variations based on the patterns it finds. It “learns” what a cat is described as and infers what a cat “is”. The system tries to figure out catness, if you will.

The way DALL-E 2 is used is pretty straightforward, though: You provide a text prompt, a description fo what you’d like to see. The system then tries to match this description with what it learned and creates that thing. A hint offered by OpenAI is to give a description of what you would like to see generated plus a hint about the how, that is the style in which it should be generated. An example given by the developers: “An astronaut riding a horse in the style of Any Warhol.”

So I’ve been feeding text prompts into the system to see what it would spit out.

Let’s see what we learned, shall we?

All the following images were generated by DALL-E 2.

Something absurd: An avocado that’s also a friendly cat:

So far, so good. This worked.

What about a pirate flag where the skull is a slice of pizza?

Not a pirate flag, but there’s something there.

How about a party in the metaverse as a modernist oil painting?

Or a cyberpunk scene, a busy rainy neon-lit street in the style of cyberpunk?

This was interesting: This totally gets the vibe of cyberpunk imagery but this isn’t a busy street at all. There’s no discernible life visible at all, and if you look closely, things are starting to get a little weird. The images don’t quite resolve. This is something we’ll notice more and more: At a glance the images look plausible, but look closer and things start falling apart a little.

For example, look at words:

A STOP sign is generated perfectly. It doesn’t matter if you prompt DALL-E to generate “a stop sign” or “a sign that says STOP”, either work really well. I assume it’s because it doesn’t actually spell the word STOP but rather just works off of a bajillion photos of discrete STOP signs.

How about generating a non-existing sign? Maybe one that says “hallelujah”?

Text generation doesn’t work well, at all. There are letters, yes, but there are no words. This I found surprising, and I’d love to learn how/why the system doesn’t do letters better. But if the system works purely from visual cues it’s explainable; I would have expected, perhapes naively, that an AI system based on text-image relationships would by default have text generation built in, but it clearly doesn’t.

Speaking of things falling apart, how about smashing some things together that usually aren’t combined? Things like toys and cyberpunk:

Again, pretty good, but look more closely and things get interesting. Hands and faces is where this is most obvious: I believe this has two reasons. One, they’re hard to get right. Two, humans are primed to really parse faces and our brain stumbles over any oddness there. If a car or wall in the background are weird, or a jacket, our brain fills in the gaps and glosses over inconsistencies. But faces (and letters, as we’ll see later), not so much.

How about some stock images? Here’s what DALL-E offered for the term smart city:

For my work, I look at a lot of smart city images and these, in their generic sterile blue-ness wouldn’t stand out even a little. These look like perfect emulations of the imagery I see in reports and presentations every day.

Does this mean DALL-E is really good or does this mean that the smart city industry uses incredibly bland and generic images? Maybe a bit of both. Something to keep in mind: If AI generates plausible versions of things, it always gives us a hint about how special the “original” (human-made) version of that thing is. (AI-generated sports and financial market news pieces are notoriously hard to differentiate from human-written ones because those forms of news are incredibly generic.) But I digress! Now comes the fun part.

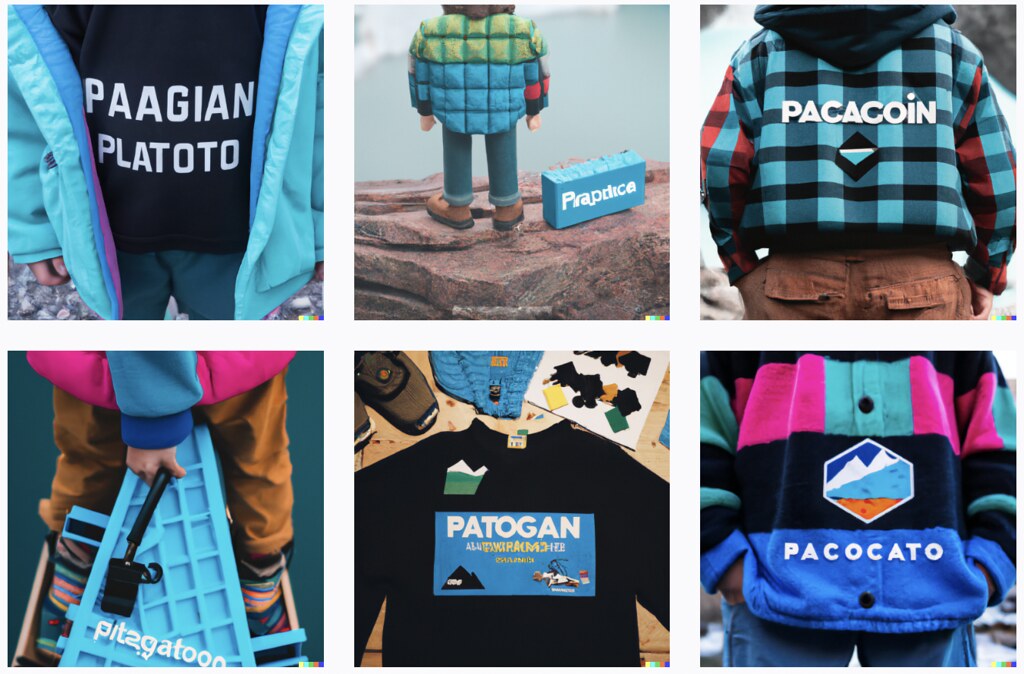

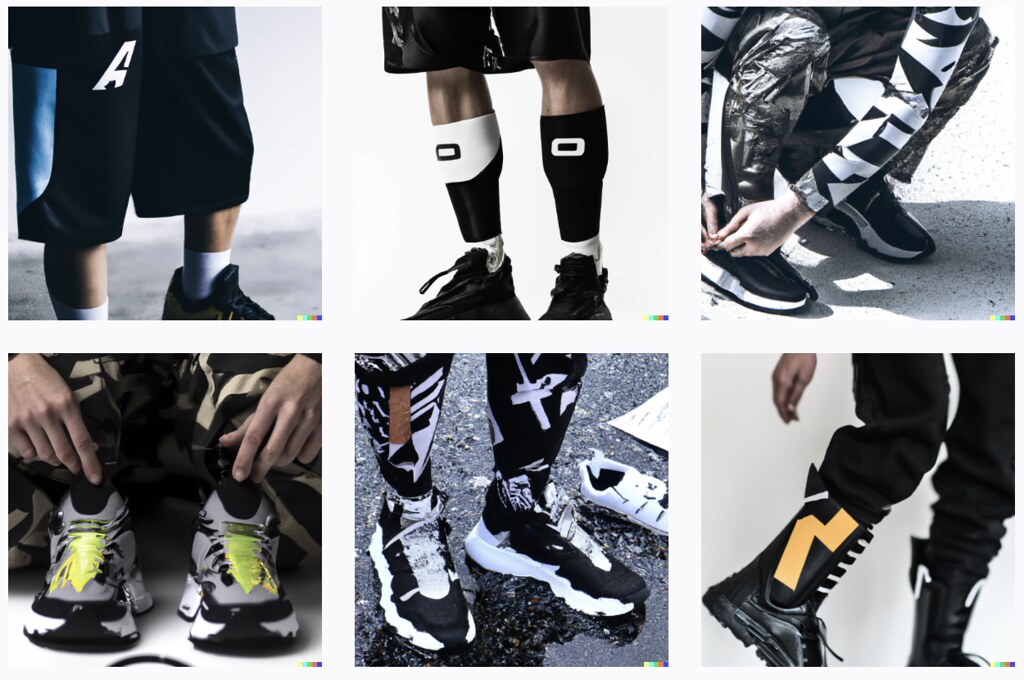

Through Derek Guy on Twitter, I was turned onto the world of “brand collaborations” generated by DALL-E.

Now, remember, these aren’t real, this is completely made up by DALL-E based on existing images and text descriptions. And it’s fascinating. I basically just combined two or so brand names and asked for a “lookbook of _____ x _____ collab” and these came out

I’ll leave it to you to guess which virtual “brands” were involved:

So, those were really fascinating to see come together. Before thinking about the results, once more: These are fully generated based on text prompts, no actual brands were involved in this. (No human models either, obviously.)

I’d encourage you to take a moment and zoom in on some of those pictures. What do you notice? Here’s what stood out to me:

- The overall quality of the generated output is massively impressive. There are odd bits and pieces, but so much of this looks amazing and has an impressive fidelity.

- There is a surprising level and consistency of “brandness” in some of those, especially if they’re large, popular, well documented in photos online. Some of these results, even without logos or proper text, seem surprisingly plausible.

- Logos are mostly not replicated. Most logos aren’t real (which is probably a good thing from a brand infringement point of view; infringement is also the reason DALL-E explicitly prohibits generating images based on real people’s likeness.)

- Details are often weird. Look at those feet – the number of toes! Those aren’t real feet!

Anyway: Fascinating.