At their Pixel 2 event at the beginning of the month, Google released a whole slew of new products. Besides new phones, there were updated versions of their smart home hub, Google Home, and some entirely new types of product.

I don’t usually write about product launches, but this event has me excited about new tech for the first time in a long time. Why? Because some aspects stood out as signs of a larger shift in the industry: the new role of artificial intelligence (AI) as it seeps into consumer goods.

Google have been reframing themselves from a mobile-first to an AI-first company for the last year or so. (For full transparency, I should add that I’ve worked with Google occasionally in the recent past, but everything discussed here is of course publicly available.)

We now see this shift of focus play out as it manifests in products.

Here’s Google CEO Sundar Pichai at the opening of Google’s Pixel 2 event:

> We’re excited by the shift from a mobile-first to an AI-first world. It is not just about applying machine learning in our products, but it’s radically re-thinking how computing should work. (…) We’re really excited by this shift, and that’s why we’re here today. We’ve been working on software and hardware together because that’s the best way to drive the shifts in computing forward. But we think we’re in the unique moment in time where we can bring the unique combination of AI, and software, and hardware to bring the different perspective to solving problems for users. We’re very confident about our approach here because we’re at the forefront of driving the shifts with AI.

Before diving into some structural thoughts, let’s look at two specific products they launched:

- Google Clips is a camera you can clip somewhere, and it’ll automatically take photos when certain conditions are met: a particular person’s face is in the picture, or they are smiling. It’s an odd product for sure, but here’s the thing: it’s fully machine-learning-powered facial recognition, and the computing happens on the device. This is remarkable both as a technical achievement and for its approach. Google has become a company of high centralization (the bane of cloud computing, I’d lament). Google Clips works at the edge, decentralized. This is powerful, and I hope it inspires a new generation of IoT products that embrace decentralization.

- Google’s new in-ear headphones offer live translation. That’s right: these headphones should allow for live, multi-language, human-to-human conversations. (This happens in the cloud, not locally.) How well this works in practice remains to be seen, and surely you wouldn’t want to run a work meeting through them. But even if it eases travel-related helplessness just a bit, it’d be a big deal.

So as we see these new products roll out, the actual potential becomes much easier to grasp. There’s a shape emerging from the fog: Google may not really be AI-first just yet, but they certainly have made good progress on AI-leveraged services.

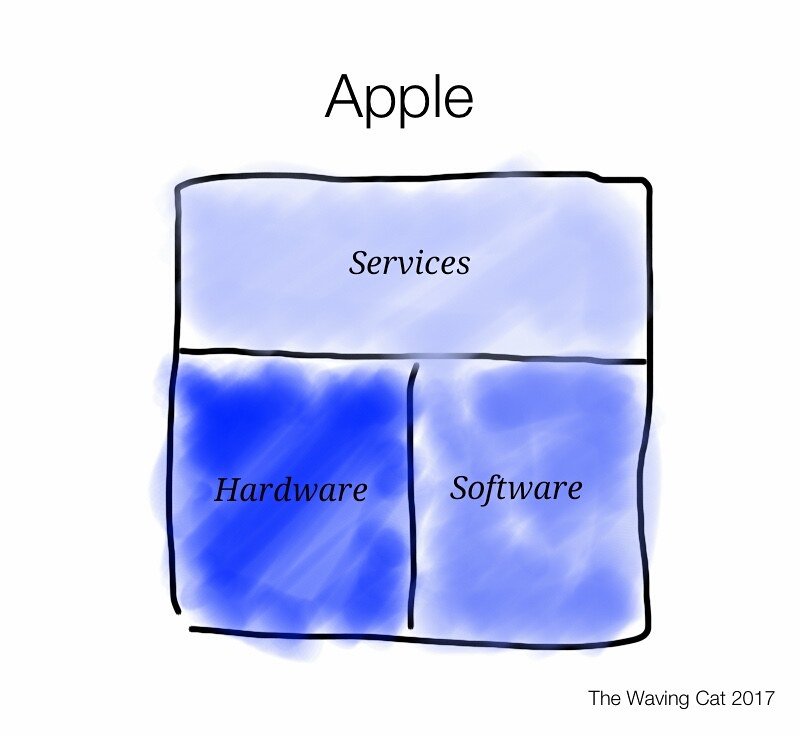

The mental model I’m using for how Apple and Google compare is this:

Apple’s ecosystem focuses on integration: hardware (phones, laptops) and software (OSX, iOS) are both highly integrated, and services are built on top. This allows for consistent service delivery and for pushing the limits of hardware and software alike. Most importantly for Apple’s bottom line, it allows Apple to sell hardware that’s differentiated by software and services: nobody else is allowed to make an iPhone.

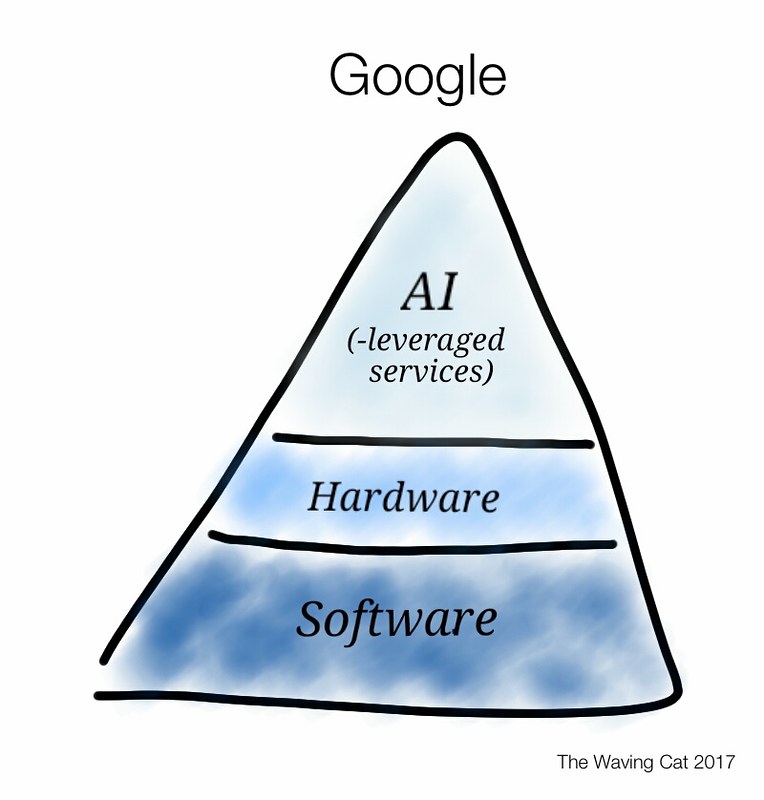

Google started at the opposite side, with software (web search, then Android). Today, Google looks something like this:

On top of a software base (search/discovery, plus Android), there’s now also hardware that’s more integrated. Note that Android is still the biggest smartphone platform as well as the basis for lots of connected products, so Google’s hardware isn’t the only game in town. How this works out with partners over time remains to be seen. That said, this new structure means Google can push its software capabilities to the limits through their own hardware (phones, smart home hubs, headphones, etc.) and then aim for the stars with AI-leveraged services in a way I don’t think we’ll see from competitors anytime soon.

What we’ve seen so far is the very tip of the iceberg. As Google keeps investing in AI and exploring the applications enabled by machine learning, this top layer should become far more interesting: they not only develop the concrete services we see in action, but also use AI to build their new models, and open up AI as a service for other organizations. It’s a triple AI ecosystem play that should reinforce itself and hence gather more steam the more it’s used.

This offers tremendous opportunities and challenges. So while it’s exciting to see this unfold, we need to get our policies ready for futures with AI.

Please note that this is cross-posted from Medium. Disclosure: I’ve worked with Google a few times in the recent past.